China: Experts call for international cooperation to regulate the use of AI in armed conflict

On July 4, 2025, the International Committee of the Red Cross (ICRC) and the Institute of International Relations at Tsinghua University (TUIIR) co-hosted a panel on “Challenges and Opportunities of the Use of AI in Armed Conflict” as part of the 13th World Peace Forum in Beijing.

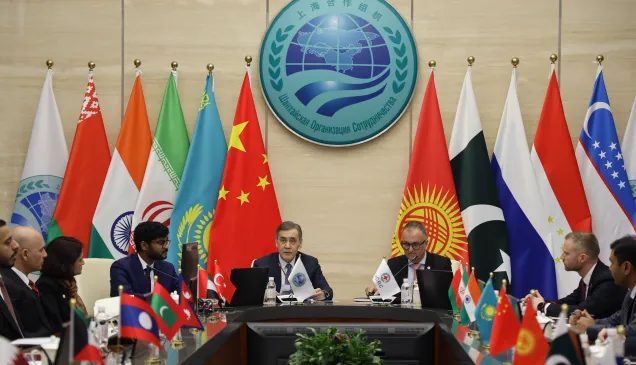

Experts and scholars from China, Europe and the United States, as well as representatives of humanitarian organizations and government institutions, held in-depth discussions on the transformative impact of AI on modern warfare and international security, its ethical and humanitarian implications, and the urgent need for effective global legal regulation.

Panel on Challenges and Opportunities of the Use of AI in Armed Conflict

Held from July 2 to 4 under the theme “Advancing Global Peace and Prosperity: Shared Responsibilities, Benefit, and Achievement”, the 13th World Peace Forum brought together global policymakers, academics, and international organizations to explore how international cooperation can support peace, development and security.

AI is transforming warfare, posing new challenges to IHL

Emerging technologies are reshaping the conduct of hostilities and making it increasingly complex. In today’s rapidly evolving security landscape, the integration of AI into military operations has raised legal, ethical and humanitarian concerns.

Balthasar Staehelin, Personal Envoy of the ICRC President to China and Head of the Regional Delegation for East Asia, chairs the panel discussion.

“AI’s integration in warfare is redefining combat, introducing advantages and profound complexities at the same time,” emphasized Balthasar Staehelin, Personal Envoy of the ICRC President to China and Head of the Regional Delegation for East Asia, in his opening remarks.

He stressed the need for the international community to ensure that belligerents use AI in accordance with their obligations under international humanitarian law (IHL). For humanitarian actors, the concern is to minimize the risk that their use of AI inadvertently harms the populations they seek to protect and assist.

AI is influencing and accelerating military decision-making, often in ways that exceed human cognitive capacity, thereby increasing risks to civilians. The ICRC holds that when States employ AI in life-and-death decisions, meaningful human control and judgment must be preserved. Legal obligations and moral responsibilities in war must never be outsourced to machines or software.

Professor Gregory Gordon of Peking University’s School of Transnational Law examined the challenges AI systems face in complying with fundamental IHL principles such as distinction, proportionality and precaution. He argued that current legal frameworks are insufficient to regulate such systems and called for the development of binding international rules.

A double-edged sword: AI in military operations and humanitarian response

Beyond legal frameworks, panelists also emphasized the need to balance the benefits and risks of AI in practice, especially in military operations and humanitarian assistance.

Bruno Angelet, Ambassador of Belgium to China, referred to the EU Artificial Intelligence Act, which entered into force on August 1, 2024. As the world’s first comprehensive AI regulation, the Act explicitly aims to “promote the uptake of human-centric and trustworthy artificial intelligence.” Ambassador Angelet stressed that this human-centric approach must extend to the military use of AI, where human accountability for decisions in war must remain paramount.

In this video, Mauro Vignati, Adviser on New Digital Technologies of Warfare at the ICRC, explains how robotics, artificial intelligence and cyber capabilities are rapidly transforming today’s battlefields. He highlights that as conflicts increasingly shift into the digital sphere, protecting civilians depends on sustained dialogue with the technology leaders, regulators and governments shaping this new environment.

Mauro Vignati, Adviser on New Digital Technologies of Warfare at the ICRC, illustrated how the humanitarian sector can leverage AI to reinforce humanitarian efforts while limiting its adverse impact on populations already affected by armed conflict. He pointed out that AI offers the humanitarian sector groundbreaking tools, from helping identify missing persons to mapping patterns of violence. Yet these innovations also carry risks to data privacy, human rights and accountability, which demand rigorous ethical safeguards. As outlined in the ICRC AI Policy, the “do no harm” principle should be applied at all times.

Panelists (from left to right): Balthasar Staehelin (Personal Envoy of the ICRC President to China and Head of Regional Delegation for East Asia, ICRC), Prof. Gregory Gordon (Peking University), H.E. Bruno Angelet (Ambassador of Belgium to China), Dr. Zeng Yi (Founding Dean, Beijing-AISI), Dr. Xiao Qian (Deputy Director, CISS, Tsinghua University), and Mauro Vignati (Adviser on New Digital Technologies of Warfare, ICRC).

A call for international cooperation to build a new framework for AI governance

Zeng Yi, Founding Dean of the Beijing Institute of AI Safety and Governance (Beijing-AISI), emphasized the multidimensional challenges of AI governance, which require cross-sector and cross-border collaboration. He noted that China, like the European Union, advocates a “human-centric” approach in its regulatory efforts. The country has introduced specific rules on generative AI and deep synthesis technologies and is developing related policy frameworks. Zeng stressed that China’s regulatory progress can complement the efforts of the EU and the United States, and that these major actors should seek alignment rather than competition in shaping future global AI governance.

Xiao Qian, Deputy Director of the Center for International Security and Strategy (CISS) at Tsinghua University, highlighted that geopolitical tensions are delaying global consensus on AI regulations and frameworks. She noted a lack of public awareness of the risks and challenges of using AI in the military domain, and argued that closing this gap would foster a more informed and nuanced public discourse that could serve as the basis for relevant policies and regulations.

As AI continues to evolve, presenting both opportunities and risks across all sectors, including the humanitarian field, the ICRC released its AI Policy in November 2024 to guide the responsible use of AI. Earlier this year, the ICRC submitted its input to the UN Secretary-General in response to General Assembly Resolution 79/239 on “Artificial intelligence in the military domain and its implications for international peace and security.” The submission aims to help States ensure that military applications of AI comply with existing legal frameworks and, where necessary, to identify areas requiring additional legal, policy or operational measures. The ICRC continues to work with States to address the challenges posed by new technologies of warfare.

Participants take part in the Digital Dilemmas immersive experience.

To complement the panel discussion, the ICRC offered participants an immersive “Digital Dilemmas” virtual reality (VR) experience illustrating the complex digital threats faced by civilians in conflict zones, ranging from misinformation and surveillance to the misuse of biometric data, AI risks and disrupted connectivity.

The panel offered valuable insights and proposed pathways for addressing the challenges and opportunities presented by AI in armed conflict, including the novel idea that AI could one day evolve to the point of preventing humans from using technology irresponsibly in conflict. Participants agreed that AI’s role in warfare will remain a pivotal concern for international security and left the session with much food for thought. To harness AI’s potential responsibly, States must deepen cooperation and urgently develop binding regulations that ensure AI technologies contribute to peace, uphold humanitarian principles and promote sustainable development, rather than exacerbating conflict.