The Shaping of International Rules on AI and AI Governance: Some Humanitarian Considerations

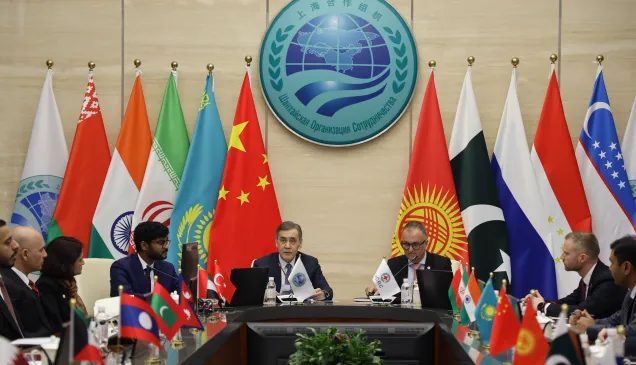

Presentation by Balthasar Staehelin, Personal Envoy of the President and Head of Regional Delegation for East Asia of the International Committee of the Red Cross, at the International Rules in Cyberspace Forum, 2025 World Internet Conference Wuzhen Summit, 9 November 2025.

Thank you for the kind introduction. I am honoured to take part in this esteemed forum, and I would like to begin by expressing my sincere gratitude to its organizers.

Before getting into my presentation, I would like to briefly introduce the organization I work for. The International Committee of the Red Cross, or ICRC for short, is an impartial, neutral and independent organization whose exclusively humanitarian mission is to protect the lives and dignity of victims of armed conflict and other situations of violence and to provide them with assistance. For more than 160 years, the ICRC has worked in numerous wars and armed conflicts, bringing assistance to those most in need.

The ICRC’s unique mandate to operate in wars and conflicts gives us first-hand experience of how civilian populations are affected by these situations. This experience has informed our contribution to the development of international law, and these field observations serve as the basis for my presentation today.

Today, I will highlight some key humanitarian considerations for the development of international rules on AI and AI governance.

First, I want to talk about the use of AI in autonomous weapon systems. In the ICRC’s understanding, autonomous weapon systems (or AWS for short) are weapon systems that select and apply force to targets without human intervention. This means that, after initial activation or launch by a person, an AWS can select a target and launch an attack on its own. As AI technology develops, AI can certainly be used in AWS to identify and select targets. In other words, the capability now exists to delegate life-and-death decisions entirely to machines, with little to no human intervention.

It is therefore no wonder that AWS raise serious humanitarian, legal and ethical concerns. Can a kill decision made by a machine comply with international humanitarian law? If the machine is wrong, who is responsible and accountable for the violation of the law? International humanitarian law (IHL) requires human judgement and human determination in military decision-making, because the sanctity of human life is not something on which a machine can make judgement calls. The human cost of conflict, often measured in civilian lives lost and civilian objects destroyed, consists of real-life consequences that should never be reduced to statistics, code and algorithms.

To address these concerns, the ICRC has urged States to establish international rules on AWS. In particular, these rules should prohibit unpredictable AWS and the use of AWS to target human beings. Discussions on international rules on AWS are still ongoing, for example in the UN process. In 2023, the President of the ICRC and the UN Secretary-General issued a joint appeal calling on States to conclude negotiations on legally binding international rules on AWS by 2026.

Second, I want to turn to the use of AI in the military domain more broadly, such as in military planning and decision-making. In contemporary armed conflicts, militaries have used AI decision-support systems. These are computerized tools that bring together data sources (such as satellite imagery, sensor data and social media feeds) and present analyses, recommendations or predictions based on them. For example, an AI decision-support system might analyse drone footage and apply image-classification technology to identify and classify potential targets. AI decision-support systems accelerate conflict from human speed to machine speed, which carries significant risks.

Another worrying issue is the use of AI to direct decision-making on the use of nuclear weapons or other weapons of mass destruction. This could increase the risk that nuclear weapons are used, with the catastrophic consequences that would result.

From a humanitarian perspective, the use of AI decision-support systems may create additional risks for civilians and other protected persons in armed conflict. These risks arise for a variety of reasons. One is the inherent “black box” problem of how AI reaches its outputs. Another is that AI is not suited to every task on the battlefield. A third is bias in AI systems. That is not to say that AI cannot help humans make decisions. But AI should never replace human decisions, especially in the military domain. This emphasis on human control and human judgement should be an overarching principle in shaping international rules on AI.

Although I have talked about the risks associated with AI, AI also brings many opportunities in the humanitarian field. As China’s Global AI Governance Action Plan noted, “AI presents unprecedented opportunities for development, and it also brings unprecedented risks and challenges”. At the ICRC, for example, AI has been used to support our humanitarian action and has yielded positive results.

I believe that shaping international rules will be key to promoting the use of AI in compliance with international law as well as with ethical and humanitarian values. AI, like any tool, is shaped by its users, and its users are guided by laws and regulations.

The debate on AI regulation is currently taking place in multiple forums, from UN processes to regional and bilateral ones, which is very promising. However, there is no denying that discussions and negotiations are moving much more slowly than innovation in the field. It is also important that the discussion not focus only on peaceful uses of AI while ignoring the reality of AI’s use in the military domain, which could have a devastating impact on civilians. Collectively, we must bring humanity to the centre of the AI discussion. The development of international rules on AI and AI governance should always uphold a human-centred approach to ensure that humanitarian considerations are adequately addressed in all circumstances.

Thank you.
