What you need to know about autonomous weapons

What is an autonomous weapon?

Autonomous weapon systems, as the ICRC understands them, are any weapons that select and apply force to targets without human intervention.

A person activates an autonomous weapon, but they do not know specifically who or what it will strike, nor precisely where and/or when that strike will occur.

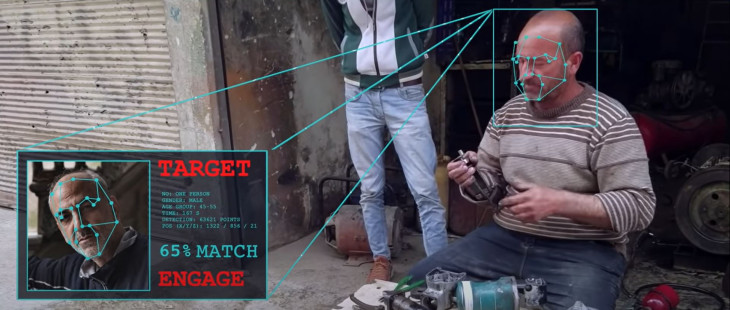

This is because an autonomous weapon is triggered by sensors and software, which match what the sensors detect in the environment against a 'target profile'.

For example, this could be the shape of a military vehicle or the movement of a person. It is the vehicle or the victim that triggers the strike, not the user.

Our concern with this process is the loss of human judgement in the use of force. That loss makes it difficult to control the effects of these weapons.

How can the user know when they activate the weapon whether the shape that triggers the strike will in fact be a military vehicle and not a civilian car?

Even if it does strike a military vehicle, what about civilians who may be in the vicinity at that moment?

When will these weapons come into existence?

Mines can be considered rudimentary autonomous weapons. The serious harm they have caused to civilians in many conflicts – because their effects are difficult to control – is well documented. That harm led the international community to ban anti-personnel mines in 1997.

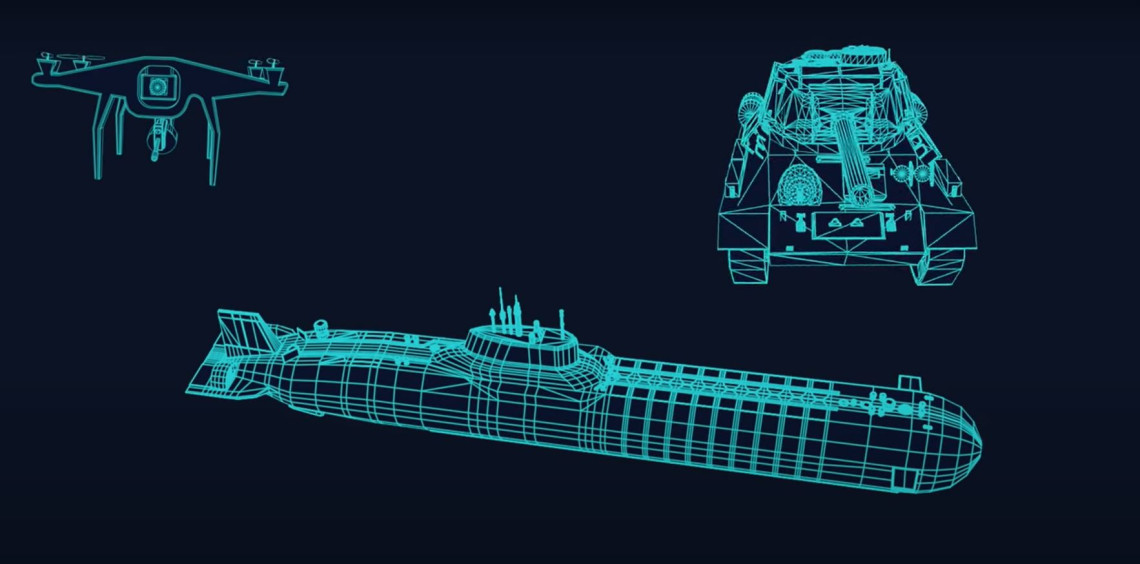

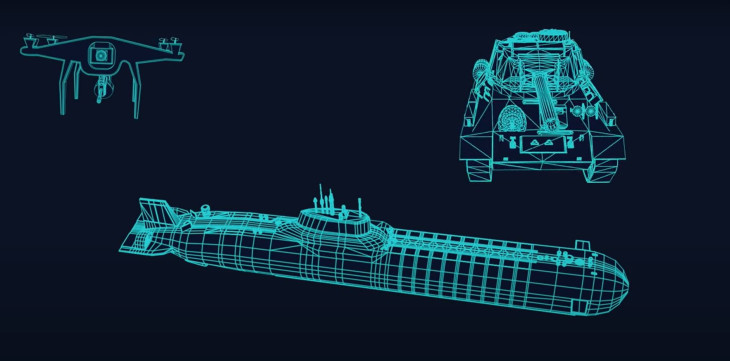

Other types of autonomous weapons have also been developed, but these tend to be used in highly constrained circumstances only. Examples include air defence systems that strike incoming missiles and some loitering munitions, developed to destroy military radars, tanks or armoured vehicles.

To date these autonomous weapons have generally been used against clear-cut military targets – weapons and munitions, military radars and enemy tanks – in areas where there are few civilians or civilian objects.

They also tend to be under tight human supervision with the ability to switch them off if the situation changes or something unexpected happens.

But weapon technologies and practices are changing fast. Militaries and weapon developers are interested in integrating the autonomous use of force in a wider variety of weapons, platforms and munitions, including armed drones that are currently remote-controlled by human operators. Worryingly, there is interest in using autonomous weapons to target humans directly.

Why is the ICRC concerned about autonomous weapons?

To put it simply, they pose humanitarian risks, legal challenges and ethical concerns due to the difficulties in anticipating and limiting their effects.

As mentioned above, they increase the dangers facing civilians. Soldiers who are no longer taking part in the fight, in other words those who have surrendered or are injured, also face greater risks.

Autonomous weapons can also accelerate the use of force beyond human control. While speed might be advantageous to militaries in some circumstances, when uncontrolled it risks escalating conflicts in an unpredictable manner and aggravating humanitarian needs.

Legal challenges

International humanitarian law requires combatants carrying out a specific attack to make context-dependent, evaluative legal judgments.

The way autonomous weapons function – where the user does not choose the specific target or the precise time or location of a strike – makes this difficult. Under what conditions could users of an autonomous weapon be reasonably certain that it will only be triggered by things that are indeed lawful targets at that time and will not result in disproportionate harm to civilians?

Autonomous weapons also raise challenges from the perspective of legal responsibility. When there are violations of international humanitarian law, holding perpetrators to account is crucial to bring justice for victims and to deter future violations. Normally investigations will look to the person who fired the weapon, and the commanding officer who gave the order to attack.

With the use of autonomous weapons, who will explain why an autonomous weapon struck a civilian bus, for example?

There are many questions about whether alleged perpetrators of war crimes could be held responsible under existing legal regimes.

Ethical concerns

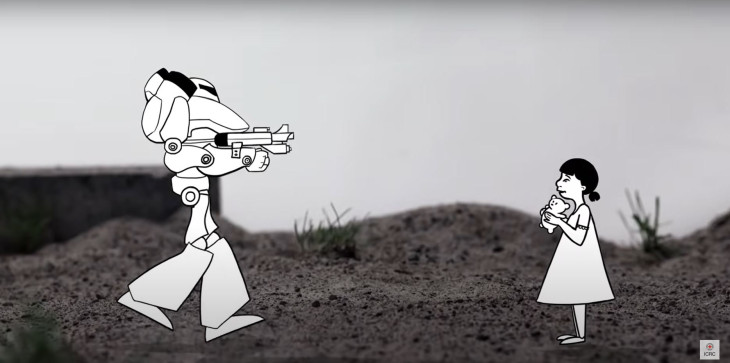

Most fundamentally, there are widespread and serious concerns over ceding life-and-death decisions to sensors and software.

Humans have a moral agency that guides their decisions and actions, even in conflicts where decisions to kill are somewhat normalized.

Autonomous weapons reduce – or even risk removing – human agency in decisions to kill, injure and destroy. This is a dehumanizing process that undermines our values and our shared humanity.

All autonomous weapons that endanger human beings raise these ethical concerns, but they are particularly acute with weapons designed or used to target human beings directly.

What is the role of AI and machine learning in autonomous weapons?

There is increasing interest in relying on AI, particularly machine learning, to control autonomous weapons.

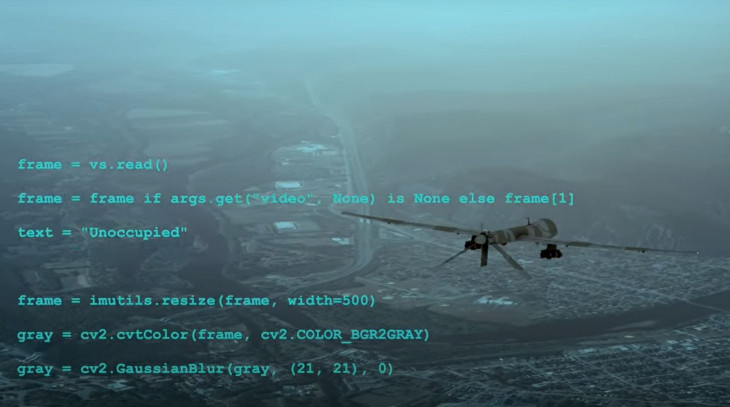

Machine learning software is 'trained' on data to create its own model of a particular task and strategies to complete that task. The software writes itself in a way.

Often this model will be a 'black box' – in other words, it is extremely difficult for humans to predict, understand, explain and test how, and on what basis, a machine learning system will reach a particular assessment or output.

As is well known from various applications, for example in policing, machine learning systems also raise concerns about encoded bias, including in terms of race, gender and sex.

With all autonomous weapons it can be very difficult for a user to predict the effects. As mentioned above, the user may not even know what will trigger a strike. Machine learning-controlled autonomous weapons accentuate this concern. They raise the prospect of unpredictability by design.

Some machine learning systems continue to 'learn' during use – so-called 'active', 'on-line' or 'self-learning' – meaning their model of a task changes over time.

Applied to autonomous weapons, if the system were allowed to 'learn' how to identify targets during its use, how could the user be reasonably certain that the attack would remain within the bounds of what is legally permissible in war?

What does the ICRC recommend that governments and others do to respond?

The ICRC has recommended that states adopt new legally binding rules on autonomous weapons. New rules will help prevent serious risks of harm to civilians and address ethical concerns, while offering the benefit of legal certainty and stability.

First, unpredictable autonomous weapons should be prohibited. That is, autonomous weapons that are designed or used in a manner such that their effects cannot be sufficiently understood, predicted and explained – including those that 'learn' targets during use and perhaps machine learning-controlled autonomous weapons in general.

Second, autonomous weapons that are designed and used to apply force against people directly should be prohibited.

Third, there need to be strict restrictions on the design and use of all other autonomous weapons to mitigate the risks mentioned above, ensure compliance with the law and address ethical concerns.

As the guardian of international humanitarian law, the ICRC does not recommend creating new rules lightly.

But we are also committed to promoting the progressive development of the law to ensure existing rules are not undermined. We want to ensure the protections for those affected by conflict are upheld and, when needed, strengthened in the face of evolving weapons and methods of warfare.

Just as with anti-personnel landmines, blinding laser weapons and cluster bombs, we need a new legally binding treaty to protect civilians and combatants. Humanity must be preserved in warfare.

These rules could be set out in a new Protocol to the Convention on Certain Conventional Weapons (CCW), or another legally binding instrument.

So how optimistic is the ICRC that new rules will be adopted?

We are confident that states will agree new international rules on autonomous weapons. Most states recognise the need to ensure a measure of human control and judgement in the use of force, which will mean setting strict constraints on autonomous weapons.

An increasing number of states are ready to adopt specific prohibitions and restrictions. Others recognise the need for these limits even if they have not yet committed to new rules.

Some of those states previously opposed to new rules are showing greater openness, perhaps driven by deepened multilateral discussions as well as developments in recent conflicts. There are now credible and pragmatic solutions on the table for how to regulate autonomous weapons. Current military technology developments and practices make it urgent that these are taken up.

What is needed now is principled political leadership by states to bring these solutions forward into a legally binding instrument that will protect people for a long time to come.